Video scene detection is a critical task in video understanding, enabling the segmentation of videos into semantically meaningful parts. This technology is foundational in various applications such as content-based video retrieval, video summarization, and automated video editing.

In this article, we'll explore the fundamentals of video scene detection, provide example queries, and walk through a tutorial on how to replicate results.

Fundamentals of Video Scene Detection

Video scene detection involves identifying boundaries between scenes, which can be defined as a series of frames that depict a continuous action or theme. Traditional approaches relied heavily on low-level features such as color histograms, edge detection, and optical flow. Modern methods, however, utilize deep learning models that can capture high-level semantic information.
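To make the "traditional" approach concrete, here is a minimal sketch of color-histogram cut detection. It assumes each frame is already decoded into a flat list of 0-255 grayscale values; in practice a video decoder would supply these. The threshold of 0.5 is an illustrative value, not a tuned one. Note that this finds shot cuts, the low-level building blocks of scenes. Grouping shots into semantic scenes needs more than a histogram.

```python
def gray_histogram(frame, bins=16):
    """Normalized intensity histogram of a frame given as a flat list of 0-255 values."""
    counts = [0] * bins
    for value in frame:
        counts[value * bins // 256] += 1
    total = len(frame)
    return [c / total for c in counts]


def histogram_distance(h1, h2):
    """Half the L1 distance between normalized histograms (0 = identical, 1 = disjoint)."""
    return 0.5 * sum(abs(a - b) for a, b in zip(h1, h2))


def detect_cuts(frames, threshold=0.5, bins=16):
    """Return indices i where a cut is detected between frame i-1 and frame i."""
    if not frames:
        return []
    cuts = []
    prev = gray_histogram(frames[0], bins)
    for i in range(1, len(frames)):
        cur = gray_histogram(frames[i], bins)
        # A large jump in the intensity distribution suggests a hard cut
        if histogram_distance(prev, cur) > threshold:
            cuts.append(i)
        prev = cur
    return cuts


# Two dark frames followed by two bright frames: one cut at index 2
frames = [[10] * 100, [12] * 100, [200] * 100, [205] * 100]
print(detect_cuts(frames))
```

Cheap features like these are sensitive to lighting changes and fast motion, which is one reason learned embeddings have largely replaced them.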

Mixpeek's Approach to Scene Detection

Mixpeek provides an API that allows developers to easily implement video scene detection. Using the mixpeek/vuse-generic-v1 model, you can quickly embed and analyze videos to detect scenes.
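Once each chunk of a video has an embedding, a simple way to place scene boundaries is to compare neighboring chunks and start a new scene wherever their similarity drops. The sketch below makes several assumptions. It expects one embedding per chunk, in time order; the pipeline later in this article produces vectors of that kind. The 0.8 threshold is illustrative. This is a generic adjacency-similarity heuristic, not necessarily how Mixpeek itself detects scenes.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def scene_boundaries(chunk_embeddings, threshold=0.8):
    """Indices i where chunk i starts a new scene (low similarity to chunk i-1)."""
    return [
        i
        for i in range(1, len(chunk_embeddings))
        if cosine_similarity(chunk_embeddings[i - 1], chunk_embeddings[i]) < threshold
    ]


def group_into_scenes(chunk_embeddings, threshold=0.8):
    """Return (first_chunk, last_chunk) index pairs, one per detected scene."""
    if not chunk_embeddings:
        return []
    starts = [0] + scene_boundaries(chunk_embeddings, threshold)
    ends = [s - 1 for s in starts[1:]] + [len(chunk_embeddings) - 1]
    return list(zip(starts, ends))


# Toy 2-D "embeddings": the content shifts between chunk 1 and chunk 2
chunks = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
print(group_into_scenes(chunks))
```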

More about vuse-generic-v1: https://learn.mixpeek.com/vuse-v1-release/

In the examples below, we've already indexed 250 videos of various lengths from social media platforms.

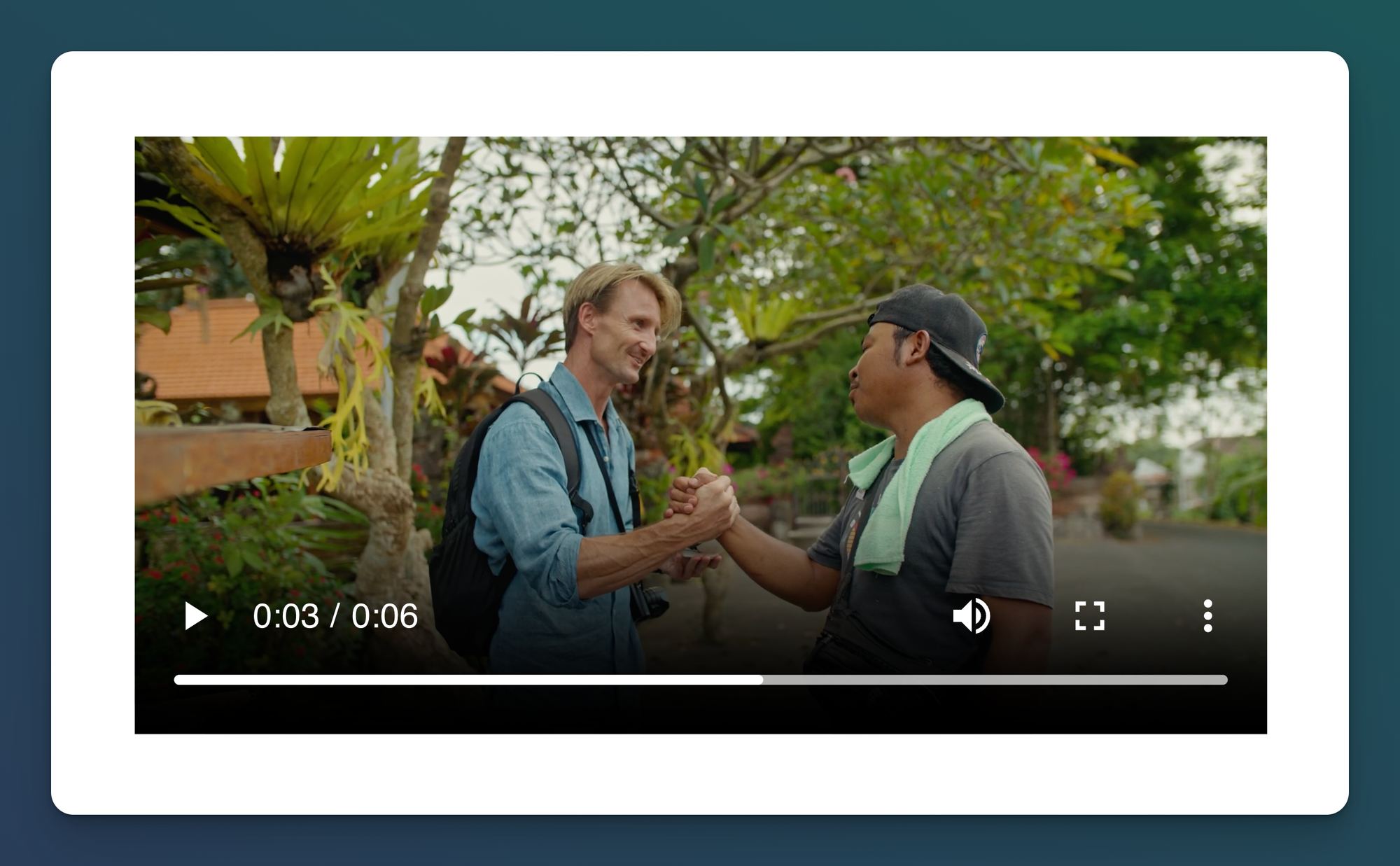

Example 1: Detecting Scenes with Handshakes Outdoors

In this query, we aim to identify scenes in a video where two people are shaking hands outdoors. This can be particularly useful for organizations or media companies that need to catalog and retrieve footage of professional or formal interactions, such as business agreements, greetings at events, or diplomatic meetings.

https://mixpeek.com/video?q=two+people+handshaking+outside

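```python
# `mixpeek` and `mongodb` are assumed to be already-initialized clients

# Embed the text query with the same model used to index the videos
query = "two people handshaking outside"
query_embedding = mixpeek.embed(query, "mixpeek/vuse-generic-v1")

# Run a KNN (nearest-neighbor) search over the stored chunk embeddings
results = mongodb.videos.search(query_embedding)
```

This query can be applied in various scenarios: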
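The search call above is shorthand for a nearest-neighbor lookup: rank every stored chunk by how similar its embedding is to the query embedding and keep the top k. The brute-force sketch below shows that idea. It assumes each record is a dict with an "embedding" key, like the records built by the pipeline later in this article. A production vector database would use an approximate index rather than scanning every record.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def knn_search(query_embedding, records, k=5):
    """Return the k (score, record) pairs most similar to the query, best first."""
    scored = [(cosine_similarity(query_embedding, r["embedding"]), r) for r in records]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


records = [
    {"id": "a", "embedding": [1.0, 0.0]},
    {"id": "b", "embedding": [0.0, 1.0]},
    {"id": "c", "embedding": [0.7, 0.7]},
]
for score, record in knn_search([1.0, 0.1], records, k=2):
    print(record["id"], round(score, 3))
```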

- Corporate Video Analysis: Companies can use this to analyze footage from corporate events, identifying key moments of interaction that may be relevant for promotional materials or internal reviews.

- News and Media: Media organizations can use this to quickly find and compile footage of important meetings or events, enhancing their reporting capabilities.

- Security and Surveillance: Security teams can use this to monitor interactions in outdoor environments, helping to identify and review significant events.

ROI Outcome

By automating the detection of such specific interactions, organizations can save time and resources that would otherwise be spent manually reviewing footage. This improves operational efficiency and ensures that key moments are not missed, providing significant value in terms of both time savings and accuracy in content management.

Example 2: Detecting Scenes with Group Dancing on a Train

In this query, we aim to identify scenes in a video where a group of people is dancing on a train. This can be particularly useful for entertainment companies, event organizers, or content creators who need to catalog and retrieve footage of unique and creative performances, such as flash mobs, music videos, or promotional stunts.

https://mixpeek.com/video?q=group+dancing+on+a+train

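```python
# Embed the text query with the same model used to index the videos
query = "group dancing on a train"
query_embedding = mixpeek.embed(query, "mixpeek/vuse-generic-v1")

# Run a KNN (nearest-neighbor) search over the stored chunk embeddings
results = mongodb.videos.search(query_embedding)
```

This query can be applied in various scenarios: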
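If the destination is MongoDB Atlas, the KNN step is normally expressed as an aggregation pipeline whose first stage is `$vectorSearch`. The helper below only builds that pipeline, so it can be inspected without a database. The index name is a hypothetical placeholder. The field names ("embedding", "file_url", "metadata.time_start", "metadata.time_end") follow the pipeline records shown later in this article. Setting numCandidates to ten times the limit is an illustrative oversampling choice.

```python
def build_vector_search_pipeline(query_embedding, index_name, k=10, num_candidates=None):
    """Build an Atlas $vectorSearch aggregation pipeline over the 'embedding' field."""
    if num_candidates is None:
        # Consider more candidates than we return to improve approximate recall
        num_candidates = k * 10
    return [
        {
            "$vectorSearch": {
                "index": index_name,
                "path": "embedding",
                "queryVector": list(query_embedding),
                "numCandidates": num_candidates,
                "limit": k,
            }
        },
        {
            # Return only the fields needed to locate the matching scene
            "$project": {
                "_id": 0,
                "file_url": 1,
                "metadata.time_start": 1,
                "metadata.time_end": 1,
                "score": {"$meta": "vectorSearchScore"},
            }
        },
    ]


pipeline = build_vector_search_pipeline([0.12, -0.03, 0.88], "video_vector_index", k=5)
print(pipeline[0]["$vectorSearch"]["numCandidates"])
```

With PyMongo, you would then run something like `results = list(collection.aggregate(pipeline))` against the collection that holds the chunk records.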

- Entertainment Industry: Film and television studios can use this to quickly find and compile footage of dance performances, enhancing their production capabilities and speeding up the editing process.

- Event Documentation: Event organizers can use this to catalog and showcase creative performances that occurred during their events, providing valuable content for marketing and promotional purposes.

- Content Creation: Social media influencers and content creators can use this to identify and feature unique dance performances, improving their content variety and engagement with their audience.

ROI Outcome

By automating the detection of such specific and unique performances, organizations can save time and resources that would otherwise be spent manually reviewing footage. This enhances operational efficiency and ensures that creative and memorable moments are not overlooked, providing significant value in terms of both time savings and accuracy in content management.

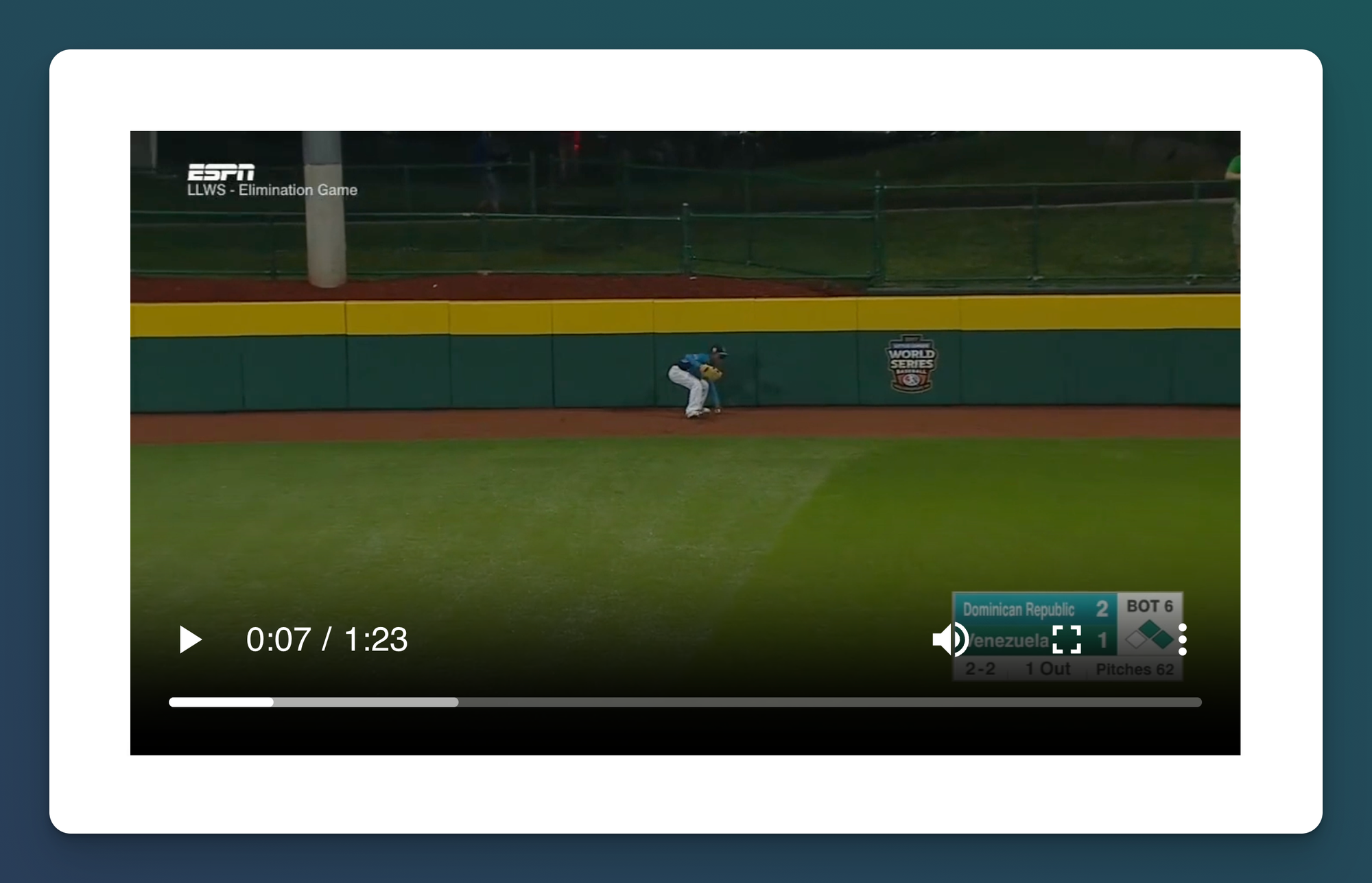

Example 3: Detecting Scenes with a Baseball Player Catching a Ball in the Outfield

In this query, we aim to identify scenes in a video where a baseball player catches a ball in the outfield. This can be particularly useful for sports analysts, broadcasters, and fans who need to catalog and retrieve footage of key defensive plays in baseball games.

https://mixpeek.com/video?q=baseball+player+catching+a+ball+in+the+outfield

```python
# Embed the text query with the same model used to index the videos
query = "baseball player catching a ball in the outfield"
query_embedding = mixpeek.embed(query, "mixpeek/vuse-generic-v1")

# Run a KNN (nearest-neighbor) search over the stored chunk embeddings
results = mongodb.videos.search(query_embedding)
```

This query can be applied in various scenarios:
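A search like this returns individual chunks. A single catch can span two or more adjacent chunks, so it often helps to merge neighboring hits from the same video into one scene. The sketch below assumes each hit is shaped like the pipeline records later in this article, with a "file_url" and a "metadata" dict holding "time_start" and "time_end". It also assumes those times are in seconds; the max_gap value is illustrative.

```python
from collections import defaultdict


def merge_hits_into_scenes(hits, max_gap=1.0):
    """Merge matching chunks from the same video into contiguous (start, end) scenes.

    Chunks whose start is at most max_gap after the previous scene's end are merged.
    Returns {file_url: [(start, end), ...]} with scenes sorted by start time.
    """
    by_video = defaultdict(list)
    for hit in hits:
        meta = hit["metadata"]
        by_video[hit["file_url"]].append((meta["time_start"], meta["time_end"]))

    scenes = {}
    for file_url, spans in by_video.items():
        spans.sort()
        merged = [list(spans[0])]
        for start, end in spans[1:]:
            if start - merged[-1][1] <= max_gap:
                # Touching or overlapping: extend the current scene
                merged[-1][1] = max(merged[-1][1], end)
            else:
                merged.append([start, end])
        scenes[file_url] = [tuple(span) for span in merged]
    return scenes


hits = [
    {"file_url": "s3://bucket/game.mp4", "metadata": {"time_start": 20.0, "time_end": 30.0}},
    {"file_url": "s3://bucket/game.mp4", "metadata": {"time_start": 10.0, "time_end": 20.0}},
    {"file_url": "s3://bucket/game.mp4", "metadata": {"time_start": 50.0, "time_end": 60.0}},
]
print(merge_hits_into_scenes(hits))
```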

- Sports Analysis: Analysts and coaches can use this to review key defensive plays, helping to evaluate player performance and develop strategies.

- Broadcasting: Sports broadcasters can quickly find and highlight significant defensive moments, enhancing their game recaps and highlight reels.

- Fan Engagement: Fans and social media platforms can use this to identify and share exciting moments from baseball games, increasing engagement and viewership.

ROI Outcome

By automating the detection of key defensive plays in baseball, organizations can save time and resources that would otherwise be spent manually reviewing footage. This enhances operational efficiency and ensures that important moments are not missed, providing significant value in terms of both time savings and accuracy in content management. For broadcasters, it means quicker and more engaging content delivery; for analysts, it translates to better performance insights; and for fans, it provides immediate access to exciting highlights.

Building Your Index for Video Scene Detection

This section will walk you through the process of building the video index using the Mixpeek SDK. We'll use the provided pipeline to split videos into chunks, generate embeddings, and extract metadata, descriptions, and tags.

There is a one-click template available here: https://mixpeek.com/templates/semantic-video-understanding

Create your Source & Destination Connections

First, you'll need to create the source connection (typically object storage such as S3) and the destination connection (a database with vector search support, such as MongoDB).
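If the destination is MongoDB Atlas, the "embedding" field must be covered by a vector search index before it can be queried. The helper below builds an Atlas Vector Search index definition for that field. The number of dimensions must match the output size of the embedding model. This article does not state that size for vuse-generic-v1, so the value in the example is a placeholder. The "cosine" default is also an assumption about how the embeddings should be compared.

```python
def vector_index_definition(num_dimensions, path="embedding", similarity="cosine"):
    """Atlas Vector Search index definition for the chunk embedding field."""
    if similarity not in ("cosine", "euclidean", "dotProduct"):
        raise ValueError(f"unsupported similarity: {similarity}")
    return {
        "fields": [
            {
                "type": "vector",
                "path": path,
                # Must equal the embedding model's output dimension
                "numDimensions": num_dimensions,
                "similarity": similarity,
            }
        ]
    }


# 768 is a placeholder; use the real dimension of your embedding model
print(vector_index_definition(768))
```

In recent PyMongo versions, a definition like this can be passed to `collection.create_search_index` by wrapping it in a `SearchIndexModel` whose type is "vectorSearch". You can also create the index in the Atlas UI.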

Once that's done, you can create a pipeline that processes each new object added to your S3 bucket. Here's an example:

```python
from mixpeek import Mixpeek, FileTools, SourceS3


def handler(event, context):
    mixpeek = Mixpeek("API_KEY")

    # Build the URL of the newly uploaded object from the S3 event
    file_url = SourceS3.file_url(event['bucket'], event['key'])

    # Split video into chunks based on the specified frame interval
    video_chunks = FileTools.split_video(file_url, frame_interval=10)

    # Generate one ID per video so that every chunk record shares the same parent
    parent_id = FileTools.uuid()

    # Initialize a list to hold one record per chunk
    full_video = []

    # Process each video chunk
    for chunk in video_chunks:
        obj = {
            "embedding": mixpeek.embed.video(chunk, "mixpeek/vuse-generic-v1"),
            # The second argument is left empty in this template
            # (presumably the extraction instruction); fill it in for your use case
            "tags": mixpeek.extract.video(chunk, ""),
            "description": mixpeek.extract.video(chunk, ""),
            "file_url": file_url,
            "parent_id": parent_id,
            "metadata": {
                "time_start": chunk.start_time,
                "time_end": chunk.end_time,
                "duration": chunk.duration,
                "fps": chunk.fps,
                "video_codec": chunk.video_codec,
                "audio_codec": chunk.audio_codec
            }
        }
        full_video.append(obj)

    return full_video
```

Once you have this code ready, create an account at https://mixpeek.com/start and link your GitHub account. Mixpeek will create a private repository called mixpeek_pipelines, where you edit the pipeline code in each file named pipeline.py.

Whatever the pipeline returns (here, the list of chunk records) is inserted into your destination database atomically and in real time.

Explanation of Key Components

- Mixpeek Instance: Initializes the Mixpeek client with your API key.

- SourceS3.file_url: Generates the URL for accessing the video file in the S3 bucket.

- FileTools.split_video: Splits the video into chunks based on the specified frame interval. This allows for more granular processing and indexing.

- Embeddings and Metadata Extraction: For each video chunk, the code generates an embedding using the mixpeek.embed.video function and extracts tags and descriptions using mixpeek.extract.video.

- Metadata: Includes additional details about the video chunk, such as start and end times, duration, frames per second (fps), video codec, and audio codec.

Benefits of Building a Vector Index

- Enhanced Searchability: Creating a vector index allows for efficient and accurate searches across video content, enabling you to quickly find specific scenes or moments.

- Improved Metadata Management: By extracting and storing detailed metadata, you can provide richer search results and better content management.

- Scalability: The process can be automated and scaled to handle large volumes of video content, ensuring your index stays up-to-date with new uploads.

By following these steps, you can build a comprehensive vector index of your video content, leveraging the power of Mixpeek to enhance your video understanding and retrieval capabilities.